Run the following command in Command Prompt to verify the installation. The screen should be similar to the following screenshot: You can use Scala in this interactive window.

Execute the following command in Command Prompt to run one example provided as part of the Spark installation (class SparkPi with parameter 10): `%SPARK_HOME%\bin\run-example.cmd SparkPi 10`

Run the following command to try PySpark: `pyspark`

The Spark SQL interactive window can be started with this command: `spark-sql`. As I have not configured Hive on my system, there will be an error when I run this command.

When a Spark session is running, you can view its details through the UI portal.
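To give a feel for what the SparkPi example computes: as far as I understand it, the example estimates Pi with a Monte Carlo method, and the parameter 10 controls how many partitions the work is split into. The sketch below shows the same idea in plain Python on a single machine, without Spark. The helper name `estimate_pi` and the sample count are assumptions for illustration only, not part of the Spark distribution.

```python
import random


def estimate_pi(samples, seed=None):
    """Estimate Pi by sampling random points in the square [-1, 1] x [-1, 1]."""
    rng = random.Random(seed)
    inside = 0
    for _ in range(samples):
        x = rng.uniform(-1.0, 1.0)
        y = rng.uniform(-1.0, 1.0)
        # Count the points that fall inside the unit circle.
        if x * x + y * y <= 1.0:
            inside += 1
    # (area of circle) / (area of square) = pi / 4
    return 4.0 * inside / samples


print(f"Pi is roughly {estimate_pi(200_000, seed=42):.4f}")
```

Spark's version distributes the sampling loop across partitions and sums the counts, so the result also varies slightly from run to run.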

APACHE SPARK FOR WINDOWS 10 VERIFICATION

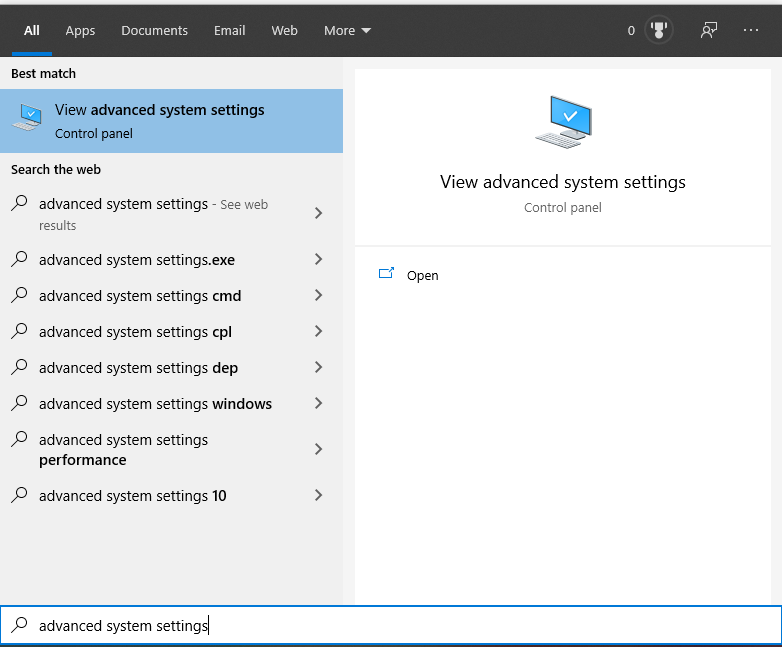

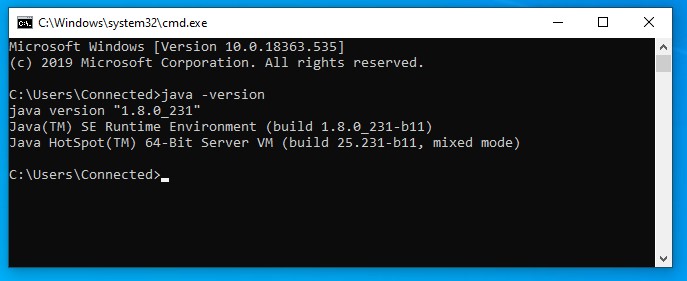

Open Git Bash, change directory (`cd`) to the folder where you saved the binary package, and then unzip it using the following commands: `mkdir spark-3.0.0` followed by `tar -C spark-3.0.0 -xvzf spark-3.0.0-bin-without-hadoop.tgz --strip-components=1`

Warning: Your file name might be different from spark-3.0.0-bin-without-hadoop.tgz if you chose a package with pre-built Hadoop libs.

Spark 3.0 files are now extracted to `F:\big-data\spark-3.0.0`.

1) Set up the environment variable JAVA_HOME if it is not done yet. The variable value points to your Java JDK location.

2) Set up the SPARK_HOME environment variable with the value of your Spark installation directory.

3) Add `%SPARK_HOME%\bin` to your PATH environment variable.

4) Configure the Spark variable SPARK_DIST_CLASSPATH. This is only required if you configure Spark with an existing Hadoop. If your package type already includes pre-built Hadoop libraries, you don't need to do this.

Run the following command in Command Prompt to find out the existing Hadoop classpath: `hadoop classpath` (run from `F:\big-data` in my case). The output lists these entries:

`F:\big-data\hadoop-3.3.0\etc\hadoop`
`F:\big-data\hadoop-3.3.0\share\hadoop\common`
`F:\big-data\hadoop-3.3.0\share\hadoop\common\lib\*`
`F:\big-data\hadoop-3.3.0\share\hadoop\common\*`
`F:\big-data\hadoop-3.3.0\share\hadoop\hdfs`
`F:\big-data\hadoop-3.3.0\share\hadoop\hdfs\lib\*`
`F:\big-data\hadoop-3.3.0\share\hadoop\hdfs\*`
`F:\big-data\hadoop-3.3.0\share\hadoop\yarn`
`F:\big-data\hadoop-3.3.0\share\hadoop\yarn\lib\*`
`F:\big-data\hadoop-3.3.0\share\hadoop\yarn\*`
`F:\big-data\hadoop-3.3.0\share\hadoop\mapreduce\*`

Set up an environment variable SPARK_DIST_CLASSPATH accordingly using the output.

Run the following command to create a default configuration file: `cp %SPARK_HOME%/conf/ %SPARK_HOME%/conf/nf`

Open nf file and add the following entries: localhost

Let's run some verification to ensure the installation is completed without errors.
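The environment variables described in this section can be sanity-checked with a short script. The following is a minimal sketch in Python, assuming the variables are named exactly as in this article; the function name `check_spark_env` is hypothetical, and it only inspects a mapping of variables without changing anything.

```python
import ntpath


def check_spark_env(env):
    """Return a list of problems found in the given environment mapping.

    `env` is any dict-like object, for example os.environ. The checks mirror
    the variables discussed in this article; the function is illustrative only.
    """
    problems = []
    for name in ("JAVA_HOME", "SPARK_HOME"):
        if not env.get(name):
            problems.append(f"{name} is not set")

    spark_home = env.get("SPARK_HOME")
    if spark_home:
        # Compare paths the way Windows does: case-insensitive, backslashes.
        wanted = ntpath.normcase(ntpath.join(spark_home, "bin")).rstrip("\\")
        entries = [
            ntpath.normcase(p.strip()).rstrip("\\")
            for p in env.get("PATH", "").split(";")
            if p.strip()
        ]
        # Accept the literal path or the unexpanded %SPARK_HOME%\bin entry.
        if wanted not in entries and r"%spark_home%\bin" not in entries:
            problems.append("SPARK_HOME\\bin is not on PATH")
    return problems
```

Calling `check_spark_env(os.environ)` from a new Command Prompt session (after `import os`) should return an empty list once the steps above are done. SPARK_DIST_CLASSPATH is deliberately not checked, because it is only needed for packages without bundled Hadoop.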

I already have Hadoop 3.3.0 installed on my system, so I selected the package without bundled Hadoop (spark-3.0.0-bin-without-hadoop.tgz). You can instead choose the package pre-built for Hadoop 3.2 or later. Save the latest binary to your local drive. In my case, I am saving the file to the folder `F:\big-data`. If you save the file to a different location, remember to change the path in the following steps accordingly.

APACHE SPARK FOR WINDOWS 10 INSTALL

Follow these steps to install Python.

1) Download and install Python from this web page.

2) Verify the installation by running the following command in Command Prompt or PowerShell: `python --version`

If the python command cannot be invoked directly, check the PATH environment variable to make sure the Python installation path is added. For example, in my environment Python is installed at `C:\Users\Raymond\AppData\Local\Programs\Python\Python38-32`, so that path is added to the PATH variable.

To work with Hadoop, you can configure a Hadoop single node cluster following this article: Install Hadoop 3.3.0 on Windows 10 Step by Step Guide

Download binary package
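If you are unsure which executable a command name resolves to, Python's standard `shutil.which` performs the same PATH lookup that the shell does. The snippet below is a small sketch that can be run with any working Python interpreter; `describe_command` is a hypothetical helper name used only for illustration.

```python
import shutil


def describe_command(name, path=None):
    """Report where `name` would be resolved from, searching PATH by default."""
    location = shutil.which(name, path=path)
    if location is None:
        return f"{name}: not found on PATH"
    return f"{name}: {location}"


# Show which executable the "python" command would run, if any.
print(describe_command("python"))
```

The same helper can be pointed at other commands used in this article, such as `java` or `hadoop`.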

Spark 3.0.0 was released on 18th June 2020 with many new features. The highlights include adaptive query execution, dynamic partition pruning, ANSI SQL compliance, significant improvements in pandas APIs, a new UI for structured streaming, up to 40x speedups for calling R user-defined functions, an accelerator-aware scheduler, and SQL reference documentation. This article summarizes the steps to install Spark 3.0 on your Windows 10 environment.

Tools and Environment

Download the latest Git Bash tool from this page. Run the installation wizard to complete the installation.

Step 4 - (Optional) Java JDK installation

You can install Java JDK 8 based on the following section. If Java 8/11 is already available on your system, you don't need to install it again.
